Today, I had the exciting opportunity to virtually attend OpenAI’s inaugural DevDay conference by watching the livestream on YouTube. As a freelance software engineer and avid user of OpenAI’s APIs, I was eager for a front-row view of the latest AI innovations from this leading artificial intelligence lab.

The biggest announcement for me was OpenAI’s brand new text-to-speech (TTS) technology that was unveiled on stage. As soon as I heard about it, I knew I had to try building something with it myself. I’ve always been fascinated by speech synthesis and the possibilities it unlocks for more natural human-computer interaction.

But TTS wasn’t the only groundbreaking reveal. Here’s a quick rundown of some other new models and products announced:

-

GPT-4 Turbo: A next-generation AI model featuring a 128K context size, improved accuracy, more control features like reproducible outputs, and reduced pricing.

-

New modalities: Vision capabilities for GPT-4, DALL-E 3 image API, and the previously mentioned TTS technology.

-

Assistants API: For creating customized AI assistants capable of calling functions, leveraging knowledge retrieval, and interpreting code.

-

GPTs: Tailored mini AIs built on top of ChatGPT that combine custom instructions, knowledge, and actions.

During the keynote, OpenAI CEO Sam Altman demonstrated the new TTS model by having it read a short scenic passage. The computer-generated voice was impressively realistic and expressive, with a smooth tone, clear pronunciation, and a natural delivery—unlike the robotic voices characteristic of older text-to-speech systems.

According to Altman, developers now have access to six unique voices to choose from, each with support for multiple languages. After hearing this, I couldn’t wait to get my hands on the API and start experimenting.

Once the DevDay livestream concluded, I immediately got to work. After acquiring an OpenAI API key, I began experimenting and quickly had a simple Python script capable of converting text to speech. Hearing my own words echoed back to me through the API was like witnessing magic!

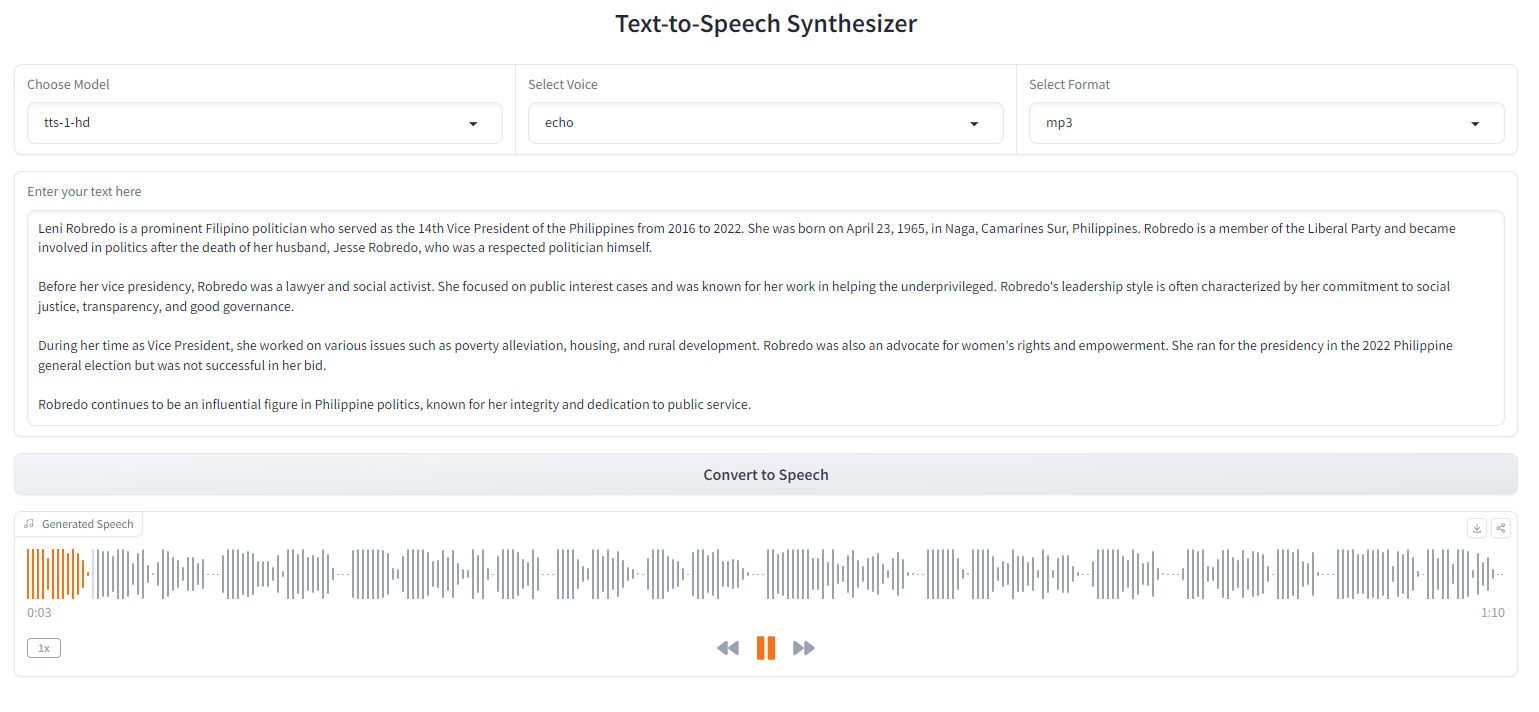

Next, I focused on incorporating this text-to-speech functionality into an accessible web interface. This led me to build a demo using Gradio, an open-source Python library that simplifies the creation of GUIs and demo apps for those without web development experience.

import gradio as grd

import os

import tempfile

from openai import OpenAI

# Initialize OpenAI client with API key

api_key = os.getenv('OPENAI_API_KEY')

os.environ['OPENAI_API_KEY'] = api_key

openai_client = OpenAI()

def synthesize_speech(input_text, selected_model, selected_voice, audio_format):

# This is a new feature from OpenAI, so please check the documentation for the correct parameter to set the audio format.

# See: https://platform.openai.com/docs/guides/text-to-speech

audio_response = openai_client.audio.speech.create(

model=selected_model,

voice=selected_voice,

input=input_text

# Add the correct parameter for audio format here, if available

)

# Determine the file extension based on the selected audio format

file_extension = f".{audio_format}" if audio_format in [

'mp3', 'aac', 'flac'] else ".opus"

# Save the synthesized speech to a temporary audio file

with tempfile.NamedTemporaryFile(suffix=file_extension, delete=False) as audio_temp:

audio_temp.write(audio_response.content)

audio_file_path = audio_temp.name

return audio_file_path

# Define the Gradio interface

with grd.Blocks() as speech_synthesizer_interface:

grd.Markdown("# <center> Text-to-Speech Synthesizer </center>")

with grd.Row():

model_selector = grd.Dropdown(

choices=['tts-1', 'tts-1-hd'], label='Choose Model', value='tts-1')

voice_selector = grd.Dropdown(choices=[

'alloy', 'echo', 'fable', 'onyx', 'nova', 'shimmer'], label='Select Voice', value='alloy')

format_selector = grd.Dropdown(

choices=['mp3', 'opus', 'aac', 'flac'], label='Select Format', value='mp3')

input_field = grd.Textbox(

label="Enter your text here", placeholder="Type here and convert to speech.")

synthesis_button = grd.Button("Convert to Speech")

audio_result = grd.Audio(label="Generated Speech")

input_field.submit(fn=synthesize_speech, inputs=[

input_field, model_selector, voice_selector, format_selector], outputs=audio_result)

synthesis_button.click(fn=synthesize_speech, inputs=[

input_field, model_selector, voice_selector, format_selector], outputs=audio_result)

# Launch the interface

speech_synthesizer_interface.launch()In just a few lines of code, I was able to set up a dropdown selector for choosing voices, a textbox for input text, and an audio player to preview the generated speech. I published this demo on Hugging Face Spaces to share with others. The full source code is available on GitHub.

Download the sample audio file here.

Download the sample audio file here.

The quality of the voices is impressive; they sound incredibly natural, with appropriate cadence, inflection, and emotion. The audio clarity is also excellent. I’m impressed by how much OpenAI has advanced beyond previous TTS models.

When building my synthesizer app, I valued the ease with which I could switch between audio formats such as MP3, AAC, Opus, and FLAC. This versatility is beneficial for optimizing latency and audio quality depending on the use case—for instance, Opus is excellent for real-time voice applications.

I observed that the standard TTS model (“tts-1”) is better suited for low-latency scenarios, while the HD version (“tts-1-hd”) excels when high audio fidelity without lag is paramount. However, the quality difference is often negligible.

The DevDay keynote highlighted innovative TTS applications, such as enhancing accessibility for visually impaired individuals, enabling multilingual voice interfaces, and creating more natural conversational agents.

As a developer, I’m thrilled by the potential this technology unlocks. TTS could be used for narrating audiobooks, producing podcasts, developing voice assistants, or generating real-time audio announcements in public venues. The possibilities are limitless.

This release marks one of the most thrilling developments from OpenAI for me. As a dedicated software developer and a podcast enthusiast, I believe the improved TTS will be transformative. The new API was remarkably easy to use, a stark contrast to the complexity one might expect when delving into such advanced technology.

The integration of TTS capabilities into OpenAI’s broader platform is a significant advancement. DevDay showcased assistants that can chat, interpret images, process data, and vocalize responses. The convergence of these modalities is where true innovation lies.

Watching the livestream in my pajamas, learning about the latest OpenAI research and advancements, was an absolute delight. It was extraordinary to hear from the creators of these transformative technologies, even though it was just me in my bedroom, captivated by my laptop screen.

One thing is certain: OpenAI is dedicated to enabling developers to create safe and beneficial AI applications. They are carefully and responsibly introducing new capabilities.

Being part of the OpenAI community during this AI revolution is a dream come true. The future innovations they will unveil are something I look forward to with great anticipation. If the rapid progress to date is any indication, the amazing demos we witnessed are just a glimpse of what’s possible as AI assistants become increasingly sophisticated and multimodal.

If DevDay is any indication, the pace of AI research is accelerating, driven by organizations like OpenAI pushing the envelope of machine capabilities. I am proud to contribute to this mission by developing applications that harness their technology for the greater good of humanity.